Why Generate Code Anyway?

I’ve recently been imagining a convenient discussion opponent (let’s call them Jerraphor) who challenges our studio’s decision, in the current vast world of possibilities, to go towards code generation in particular. This post will proceed to argue against their well-crafted, totally-not-conveniently-simplified points.

This post focuses on how technology can enable new kinds of games, rather than how it will affect the pre-established pipelines for game development.

Jerraphor: We’ve been seeing lots of examples of generating textures and visuals using diffusion models, and doing this on-the-go should give us infinite game variations, right?

An infinite amount is correct, and yet we’re certain that will get old quickly. The concept of “reskinning existing games” has been a topic of conversation in gaming for a long while, and with good reason. Ignoring the problem of the blatant plagiarism this reskinning enables, it allows the original developer of a game to add, for example, seasonality to their game through festive makeovers. While that may bring value to an existing audience, it almost always lacks substance, and trying to sell this variant as an independent game puts players off like nothing else. While visuals may draw people in, what players stick around for are the game mechanics, and you cannot vary those purely through a new visual style.

So, then, rather than focusing on how things look (visuals), let’s dive into how they interact with each other (mechanics).

Jerraphor: Okay, I can buy that visuals alone aren’t enough. But what about those intelligent NPC demos? They’re really impressive!

Now we’re getting into the meat of it. As for many others, seeing NPCs that respond like humans, in real time, has been a dream of mine since I was a kid. Those initial few minutes where you feel like you’re truly connecting to a character feel magical.

But then the conversation ends, and what inevitably sets in is the lack of consequences of that interaction. We go back to the same game world, as if that conversation had never happened in the first place. Some recent games have cleverly managed to hide this fact by discretising these interaction episodes, or by letting the conversation affect the world in very predetermined ways, but once the novelty of this mechanic wears off, the lack of integration depth becomes very apparent.

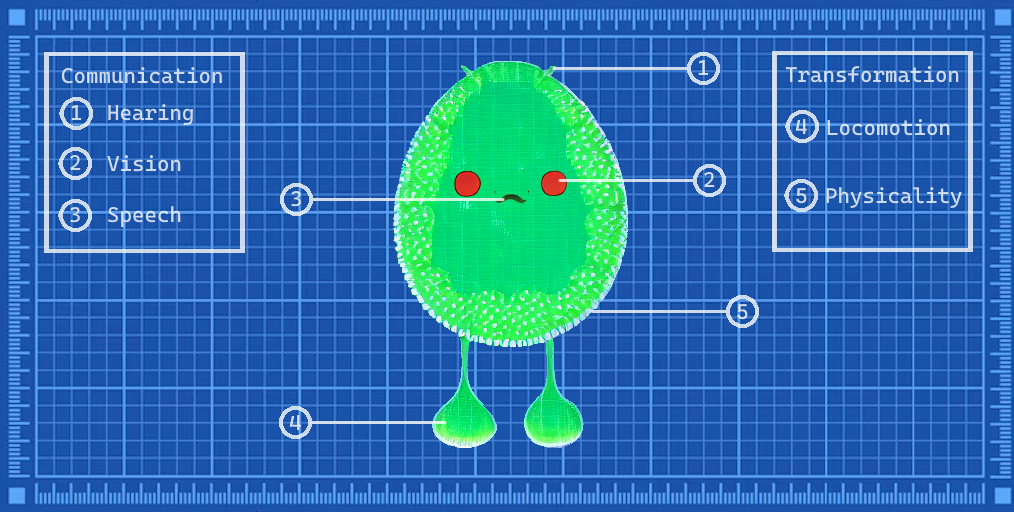

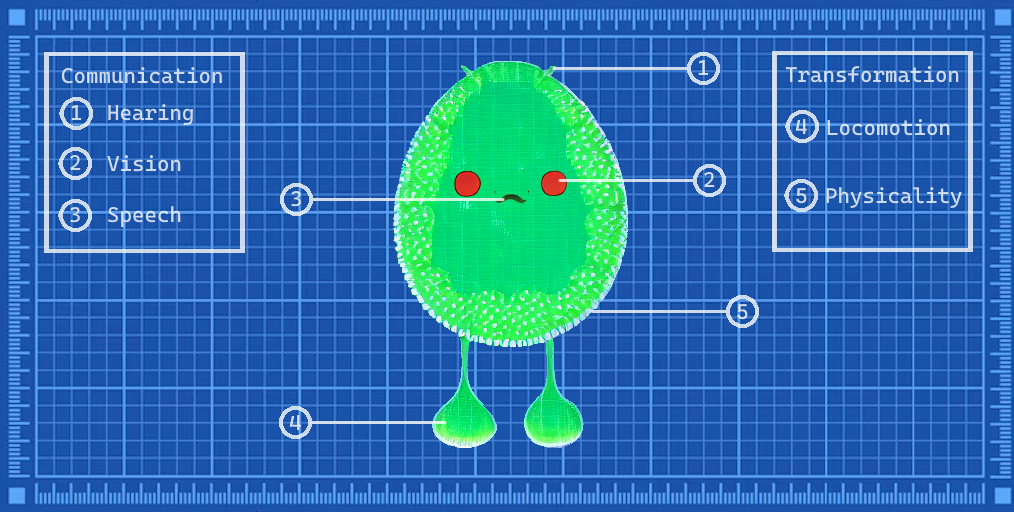

It’s useful to further break down this “mechanics” category into what entities can understand (communication) vs what they can change directly (transformation). These intelligent NPCs have greatly increased communication abilities, but the ways in which they can transform the game world are still very limited. The ability to generate code provides the complementary capability: it lets conversations have a profound, tangible, and lasting impact on the game world after they conclude.

Jerraphor: Well, we’ve seen the player, through their actions, change the course of a game and that was way before anyone talked about generating code! I still don’t see the reason why this code generation is needed.

You’re right, there have been many games that have utilised the existing, “parametric” or “rule-based” personalisation systems to great success. Historically, story-driven games have been largely constrained to creating branching story paths (i.e. multiple-choice options in dialogue) to ensure the quality of each outcome, although even there we’ve begun to see some attempts at procedural narrative (e.g. the fantastic Wildermyth).

So, to make the comparison between the old and the new more interesting, let’s focus on sandbox games, whose champion is inarguably Minecraft. Minecraft’s infinite, varied world has been a big part of the game’s long-lasting success. And yet, for experienced players, it no longer holds that sheen of wonder, that spark that keeps you guessing what’s around the corner. This, the conjecture goes, is due to a limitation of previous technology: the need to specify the procedural generation of the world in a parametric manner, where the world creation process represents a static assembly line, which has various knobs that regulate its operations. In this analogy, code generation allows you to construct a new, bespoke creation process on the spot.
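To make the assembly-line analogy a bit more concrete, here is a minimal Python sketch. Everything in it is hypothetical: `parametric_world`, its knobs, and `GENERATED_SOURCE`, a hard-coded string standing in for code that a model might produce at runtime (no model is called here). It only illustrates the distinction and does not describe how Minecraft or any real system works.

```python
import random


def parametric_world(seed, width=8, tree_density=0.2, water_level=0.3):
    """Fixed assembly line: the knobs change, the process never does."""
    rng = random.Random(seed)
    tiles = []
    for _ in range(width):
        height = rng.random()
        if height < water_level:
            tiles.append("water")
        elif rng.random() < tree_density:
            tiles.append("tree")
        else:
            tiles.append("grass")
    return tiles


# Stand-in for source code produced on the spot (hypothetical, hard-coded here).
GENERATED_SOURCE = '''
def generate(seed, width):
    # A rule the fixed pipeline has no knob for: bridges replace some water.
    base = parametric_world(seed, width=width)
    return ["bridge" if tile == "water" and i % 2 == 0 else tile
            for i, tile in enumerate(base)]
'''


def load_generator(source):
    """Turn generated source into a callable creation process."""
    namespace = {"parametric_world": parametric_world}
    exec(source, namespace)  # a real system would sandbox and validate this
    return namespace["generate"]


bespoke_world = load_generator(GENERATED_SOURCE)
print(parametric_world(seed=1))
print(bespoke_world(seed=1, width=8))
```

No combination of `tree_density` and `water_level` will ever yield a bridge, whereas the loaded generator can introduce a rule that did not exist when the game shipped.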

For another example, let’s go back to one of the games of all time, Spore. I personally enjoyed it immensely the first time around, but when I came back to it, I noticed some things that made me understand its mixed reviews. In Spore, you would create various layers of civilization that fed into each other (what I think is a genius, under-explored idea to this day). However, once a civilization stage was left behind, the only things you’d carry over were either visuals or, on the gameplay side, some basic stats. That’s because what you needed, and what wasn’t available at the time, was the ability to adapt the game mechanics to the player’s creation. The way to do that is by generating code.

Finally, one more relevant concept worth noting is that of “interoperability”, which often comes up in discussions of the Metaverse (web3 is almost always brought up here as well, which I think muddles the underlying concepts). Right now, there are services that can create player avatars that transfer over between different worlds. Building on top of this, people have also spoken about the ability to “make a gun in Call of Duty and shoot it in Battlefield”, but this requires Activision and EA to agree on a “best FPS game architecture”, something that is unlikely to happen soon. The way forward we see is to flip the problem on its head and ask “Can you take a Call of Duty weapon and make it work in Battlefield on the go?”. More to come on this front in the future.
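As a rough illustration of what “on the go” could mean, here is a small Python sketch of the glue code involved. The two schemas (`SourceWeapon`, `TargetWeapon`) and the `adapt_weapon` function are invented for this example and do not reflect how either franchise represents weapons. The idea is only that the adapter is the piece that would be generated per item, instead of both studios agreeing on one shared format up front.

```python
from dataclasses import dataclass


@dataclass
class SourceWeapon:
    """How a hypothetical 'game A' describes a weapon."""
    name: str
    damage_per_shot: float
    rounds_per_minute: float


@dataclass
class TargetWeapon:
    """What a hypothetical 'game B' expects instead."""
    label: str
    damage_per_second: float
    cooldown_seconds: float


def adapt_weapon(weapon: SourceWeapon) -> TargetWeapon:
    """The glue that would be generated, rather than standardised in advance."""
    shots_per_second = weapon.rounds_per_minute / 60.0
    return TargetWeapon(
        label=weapon.name,
        damage_per_second=weapon.damage_per_shot * shots_per_second,
        cooldown_seconds=1.0 / shots_per_second,
    )


rifle = SourceWeapon(name="rifle", damage_per_shot=30.0, rounds_per_minute=600.0)
print(adapt_weapon(rifle))
```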
